We are trying to create some applications/extensions that allow people to interact naturally with 3D built environments by pointing at or walking up to objects in the digital environment. These should work on a desktop or on a large surround screen (the figure below is of the Curtin HIVE), using a Kinect (SDK 1 or 2) for tracking. Ideally we will be able to:

- Green-screen the narrator into a 3D environment (background removal).

- Control an avatar in the virtual environment using the speaker’s gestures.

- Trigger slides and movies inside a Unity environment via the speaker’s finger-pointing. Ideally the speaker could also change the chronology of the built scene with gestures (or voice), alter components or aspects of buildings, and move or replace parts or components of the environment. Possibly also use the (improved) Leap SDK.

- Better employ the curved screen so that participants can communicate with each other.
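For the gesture-driven items above (triggering the next slide, stepping the scene's chronology), one simple approach is to watch a tracked hand's horizontal position over a short time window and fire an event when it moves far enough, fast enough. The sketch below is in Python for readability; a Unity version would be a C# script. It assumes the tracker delivers the hand's x coordinate in metres together with a timestamp in seconds, and the class name and the 0.4 m / 0.5 s thresholds are hypothetical values that would need tuning.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class SwipeDetector:
    """Detects horizontal hand swipes from a stream of (time, x) samples.

    Hypothetical thresholds: a swipe is a horizontal hand movement of at
    least min_distance metres completed within max_duration seconds.
    """

    def __init__(self, min_distance: float = 0.4, max_duration: float = 0.5) -> None:
        self.min_distance = min_distance
        self.max_duration = max_duration
        self._samples: Deque[Tuple[float, float]] = deque()

    def update(self, t: float, hand_x: float) -> Optional[str]:
        """Feed one tracked hand sample; return 'next', 'previous' or None."""
        self._samples.append((t, hand_x))
        # Forget samples that fall outside the swipe time window.
        while t - self._samples[0][0] > self.max_duration:
            self._samples.popleft()
        dx = hand_x - self._samples[0][1]
        if abs(dx) >= self.min_distance:
            self._samples.clear()  # reset so one motion fires only once
            return "next" if dx > 0 else "previous"
        return None


detector = SwipeDetector()
track = [(0.0, 0.0), (0.1, 0.15), (0.2, 0.3), (0.3, 0.45)]
events = [detector.update(t, x) for t, x in track]
print(events)  # [None, None, None, 'next']
```

A slow drift of the hand never covers the minimum distance inside the time window, so it does not fire; only a quick, deliberate sweep does.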

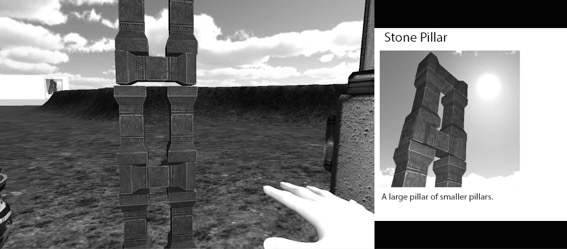

We can have a virtual/tracked hand point at objects, creating an interactive slide presentation to the side of the Unity environment. As objects are pointed at, information appears in a camera window/pane next to the 3D digital environment; alternatively, these info windows are triggered when the user approaches an object.
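Both triggers reduce to two geometric tests: cast a ray from the tracked elbow through the tracked hand and find the nearest object it hits, and list the objects within some distance of the user's tracked position. This Python sketch assumes each object is approximated by a bounding sphere; the scene layout, function names and radii are hypothetical. Inside Unity the engine's own physics raycasting would normally do the hit test, so this only illustrates the logic.

```python
import math
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical scene description: object name -> (bounding-sphere centre, radius).
Scene = Dict[str, Tuple[Vec3, float]]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pointed_object(elbow: Vec3, hand: Vec3, scene: Scene) -> Optional[str]:
    """Name of the nearest object hit by the elbow->hand ray, or None."""
    d = _sub(hand, elbow)
    length = math.sqrt(_dot(d, d))
    if length == 0:
        return None
    d = (d[0] / length, d[1] / length, d[2] / length)
    best, best_dist = None, math.inf
    for name, (centre, radius) in scene.items():
        to_centre = _sub(centre, hand)
        along = _dot(to_centre, d)  # distance along the ray to closest approach
        if along < 0:
            continue  # object lies behind the pointing hand
        miss_sq = _dot(to_centre, to_centre) - along * along
        if miss_sq > radius * radius:
            continue  # ray passes outside the bounding sphere
        hit_dist = along - math.sqrt(radius * radius - miss_sq)  # entry point
        if hit_dist < best_dist:
            best, best_dist = name, hit_dist
    return best


def nearby_objects(user: Vec3, scene: Scene, trigger_radius: float) -> List[str]:
    """Objects whose centre is within trigger_radius of the user (approach trigger)."""
    return sorted(name for name, (centre, _) in scene.items()
                  if math.dist(user, centre) <= trigger_radius)


scene = {"door": ((0.0, 1.0, 3.0), 0.5), "window": ((2.0, 1.5, 3.0), 0.5)}
print(pointed_object((0.0, 1.0, 0.0), (0.0, 1.0, 0.5), scene))  # door
print(nearby_objects((1.8, 1.5, 2.0), scene, trigger_radius=1.5))  # ['window']
```

The elbow-to-hand direction is only one option for the pointing ray; a shoulder-to-hand or head-to-hand ray is another, and which feels more natural on a curved surround screen would need testing.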

A commercial solution for Kinect tracking inside Unity environments is http://zigfu.com/, but it only appears to work with SDK 1. That is a bit of a problem, or to put it another way:

Problem: all the solutions we have found seem to target Kinect SDK 1, and SDK 2 only appears to work on Windows 8. We use Windows 7 and Mac OS X (10.10.1).

So if anyone can help, please reply, email or comment on this post.

And for those doing similar things, here are some links I found on creating Kinect-tracked environments:

KINECT SDK 1

Kinect with MS-SDK is a set of Kinect examples, utilizing three major scripts and test models. It demonstrates how to use Kinect-controlled avatars or Kinect-detected gestures in your own Unity projects. This asset uses the Kinect SDK/Runtime provided by Microsoft. URL: http://rfilkov.com/2013/12/16/kinect-with-ms-sdk/

And here is “one more thing”: A great Unity-package for designers and developers using Playmaker, created by my friend Jonathan O’Duffy from HitLab Australia and his team of talented students. It contains many ready-to-use Playmaker actions for Kinect and a lot of example scenes. The package integrates seamlessly with ‘Kinect with MS-SDK’ and ‘KinectExtras with MsSDK’-packages.

NB

KinectExtras for Kinect v2 is part of the “Kinect v2 with MS-SDK”. This package here and “Kinect with MS-SDK” are for Kinect v1 only.
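The “Kinect with MS-SDK” examples drive avatars from tracked skeleton joints. We have not checked exactly how those scripts map joints onto avatar bones, but one basic ingredient of any such mapping is the angle at a joint, computed from three tracked joint positions (for example shoulder, elbow and wrist). A Python sketch, assuming joint positions arrive as (x, y, z) tuples in metres; the function name is hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def joint_angle_deg(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Angle in degrees at joint b between the segments b->a and b->c."""
    ba = tuple(a[i] - b[i] for i in range(3))
    bc = tuple(c[i] - b[i] for i in range(3))
    dot = sum(ba[i] * bc[i] for i in range(3))
    norms = math.hypot(*ba) * math.hypot(*bc)
    if norms == 0:
        raise ValueError("joint positions coincide")
    cos_angle = max(-1.0, min(1.0, dot / norms))  # clamp rounding error
    return math.degrees(math.acos(cos_angle))


shoulder, elbow, wrist = (0.0, 0.0, 0.0), (0.0, -0.3, 0.0), (0.3, -0.3, 0.0)
print(round(joint_angle_deg(shoulder, elbow, wrist), 1))  # 90.0
```

A full avatar retarget would work with bone rotations (for example quaternions) rather than single angles, but the angle is a handy value for checking tracking quality or for simple pose-based gestures.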

BACKGROUND REMOVAL (leaves just player)

rfilkov.wordpress.com/2013/12/17/kinectextras-with-mssdk/
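Background removal of this kind keeps only the camera pixels that the sensor has labelled as belonging to a tracked person and fills everything else with the rendered 3D scene. A minimal Python sketch, assuming Kinect SDK 1's packed depth format (player index in the low 3 bits of each depth pixel, which as we understand it is only filled in while skeleton tracking is enabled) and assuming depth has already been registered to the colour image; the function names and tiny test images are hypothetical. As far as we know, SDK 2 provides the equivalent per-pixel information in a separate body-index frame instead.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]

# Kinect SDK 1 packed depth format: the low 3 bits of each depth pixel hold the
# player index (0 = no player) and the remaining bits hold the depth in mm.
PLAYER_INDEX_BITS = 3
PLAYER_INDEX_MASK = (1 << PLAYER_INDEX_BITS) - 1


def depth_mm(packed: int) -> int:
    """Depth in millimetres from a packed SDK 1 depth pixel."""
    return packed >> PLAYER_INDEX_BITS


def composite_player(colour: Sequence[Sequence[RGB]],
                     packed_depth: Sequence[Sequence[int]],
                     scene: Sequence[Sequence[RGB]]) -> List[List[RGB]]:
    """Keep camera pixels that belong to a player; show the 3D scene elsewhere.

    Assumes all three images have the same size and that depth has already
    been mapped onto colour-image coordinates.
    """
    return [
        [c if (d & PLAYER_INDEX_MASK) != 0 else s
         for c, d, s in zip(colour_row, depth_row, scene_row)]
        for colour_row, depth_row, scene_row in zip(colour, packed_depth, scene)
    ]


camera = [[(200, 150, 120), (10, 10, 10), (90, 80, 70)]]
depth = [[(1500 << 3) | 1, 2000 << 3, (1200 << 3) | 2]]  # player 1, none, player 2
render = [[(0, 0, 255)] * 3]
print(composite_player(camera, depth, render))
# [[(200, 150, 120), (0, 0, 255), (90, 80, 70)]]
```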

FINGER TRACKING (Not good on current Kinect for various reasons)

- http://www.ar-tracking.com/products/interaction-devices/fingertracking/

- FingerTracker is a Processing library that does real-time finger tracking from depth images (not sure whether it uses SDK 1): http://makematics.com/code/FingerTracker/

- Finger tracking for interaction in augmented environments, by K Dorfmüller-Ulhaas (https://www.ims.tuwien.ac.at/publications/tr-1882-00e.pdf): a finger tracker that allows gestural interaction and is simple, cheap, fast … is based on a marked glove, a stereoscopic tracking system and a kinematic 3-d …

- Video of “Finger tracking with Kinect SDK”: https://www.youtube.com/watch?v=rrUW-Z3fHkk

- Finger tracking with Kinect SDK for Xbox (C# source code hosted on java2s.com): http://www.java2s.com/Open-Source/CSharp_Free_Code/Xbox/Download_Finger_Tracking_with_Kinect_SDK_for_XBOX.htm

- Microsoft can do it: http://www.engadget.com/2014/10/08/kinect-for-windows-finger-tracking/ (we might need to contact them for more information).
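Several of the approaches above work from depth images. One common technique for fingertip detection on a depth-segmented hand outline is the k-curvature test; we don't know whether FingerTracker or the Kinect SDK demos above use it. The Python sketch assumes the hand contour has already been extracted (for example by keeping depth pixels close to the tracked hand joint and tracing their outline) as an ordered, counter-clockwise list of 2D points; the function name, k and the 60° threshold are hypothetical starting values.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def fingertip_candidates(contour: Sequence[Point], k: int = 10,
                         max_angle_deg: float = 60.0) -> List[int]:
    """Indices of contour points that look like fingertips (k-curvature test).

    For each point p_i, take the vectors to p_{i-k} and p_{i+k}; a small angle
    between them means a sharp turn. The cross-product sign keeps only convex
    turns (tips) and rejects the equally sharp valleys between fingers. Assumes
    a counter-clockwise contour in y-up coordinates.
    """
    n = len(contour)
    tips = []
    for i in range(n):
        px, py = contour[i]
        ax, ay = contour[(i - k) % n]
        bx, by = contour[(i + k) % n]
        v1 = (ax - px, ay - py)
        v2 = (bx - px, by - py)
        norms = math.hypot(*v1) * math.hypot(*v2)
        if norms == 0:
            continue
        cos_a = (v1[0] * v2[0] + v1[1] * v2[1]) / norms
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        # Turn direction from p_{i-k} -> p_i -> p_{i+k}; positive means convex.
        turn = (px - ax) * (by - py) - (py - ay) * (bx - px)
        if angle <= max_angle_deg and turn > 0:
            tips.append(i)
    return tips


# Coarse two-finger outline: tips at indices 3 and 5, a valley at index 4.
hand = [(0, 0), (4, 0), (4, 2), (3.5, 6), (2, 2), (0.5, 6), (0, 2)]
print(fingertip_candidates(hand, k=1))  # [3, 5]
```

On a real contour with hundreds of points k would be much larger than the k=1 used on this coarse outline (the default of 10 is only a guess), and the run of neighbouring candidate indices around each tip would be merged into a single fingertip.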

HAND TRACKING FOR USE WITH AN OCULUS RIFT

Nimble VR, for use with the Rift: http://nimblevr.com/

Download Nimble VR: http://nimblevr.com/download.html (Windows 8 required, but Mac binaries are available).